Insurers seek to close the engagement gap with consumers and clients. They prioritize digital transformation as a means to achieve a superior digital customer experience. And those transformations are powered by data.

Life insurance carriers are treasure troves of data, housing millions of policies and client documents accumulated over decades. Today, carriers see the potential in leveraging that data. They see it as a strategic asset that could enhance their company’s ability to generate business insights, quickly create better targeted products, and deliver accelerated, personalized digital experiences for advisors and clients.

However, many insurers have not yet been able to realize the full potential of their data.

A common problem is that data doesn’t move freely within the independent life insurance channel. It can be siloed at various points between both carriers and distributors. Producers/Advisors find themselves having to access multiple sources to uncover the full picture of their clients’ coverage(s), investments and personal data. All of this data is required to provide a complete 360° view of the end consumer.

It becomes very difficult and costly to realize the benefits of data as a key strategic asset if you can’t access it easily and automatically, and have it presented in a usable format and in a timely fashion for the purposes needed.

The key to unlocking this foundational data lies in planning and executing a holistic data management program that includes data aggregation, transformation (cleansing, normalization, standardization), and strategies for data integration and migration.

How are Life Insurance Carriers Getting Value from their Data? #

For a life insurance carrier, gaining access to all of its data presents an opportunity to gain a significant competitive edge. A Celent study, commissioned by Equisoft, explored how insurers are creating value with their data and found that there is no area of the insurance business currently untouched by data.

Rich and meaningful access to data also allows insurers to ask questions of themselves that were previously not possible. From risk assessment and underwriting to product development, policy/distribution channel administration, and claims management, data plays a pivotal role in shaping strategies, driving operational efficiency, and optimizing business performance.

Carriers are leveraging data to improve customer understanding, segmentation and targeting #

Data has been critical in helping life insurers gain a deeper understanding of their customers. It helps provide decision-makers with more transparent, granular information on customer preferences, goals, and where each consumer is in the lifecycle journey. Overall, data is the most critical driver of successful customer understanding and prospecting efforts, creating opportunities for cross-selling initiatives, conservation efforts (keeping long-term customers), and chances to deepen client relationships.

By feeding data through advanced analytics and AI, insurers can go from surface-level information about a customer’s demographics (simple form data like age and location) to a richer and more meaningful understanding of their needs, based on combinations of data sets that reveal customer intent, attitudes towards risk and reactions to life events. This deeper interpretation enables insurers to tailor their offerings and experiences in a way that will truly resonate.

Carriers are leveraging customer feedback to enhance operational efficiency #

Carriers are tapping into the wealth of available data to bridge the gap between customer perceptions and operational realities, redefining strategies, and gaining valuable insights into customer preferences. By analyzing customer feedback alongside internal data, carriers can differentiate between perception and reality, gaining crucial insights into how they might streamline operations or improve products to alleviate customer pain points.

For example, customer feedback can help carriers understand why a product is not performing as expected. Are sales low because the product is poorly designed, not a good fit for the intended market or because consumers don’t understand the product’s value because the features and benefits are poorly communicated? Product ratings, chatbots, exit surveys or call center discussions can reveal whether the insurer should seek to improve communication and messaging or make adjustments to the product.

Customer feedback plays a vital role in shaping new business strategies. Data insights obtained from customer feedback often unveil valuable information that challenges conventional wisdom.

A noteworthy example is a recent study that demonstrated how customers prioritize the long-term viability of a brand over price when shopping for life insurance.

This finding contradicts the prevailing notion that price is the ultimate deciding factor. Such insights highlight the importance of delving deeper into customer preferences and aligning strategies accordingly, rather than relying solely on preconceived notions about customer priorities.

Furthermore, while traditional measures of customer satisfaction, like the Net Promoter Score (NPS), remain useful, carriers now have access to an abundance of additional data sources that accurately gauge the effectiveness of customer experience and engagement efforts. Chatbots, robo-advisors, online ratings, social media interactions, and surveys all contribute to a wealth of data revealing customer attitudes toward the companies they engage with.

Analysis of all of this data can reveal customer pain points, including steps or activities in the application, service, or claims processes where they feel frustration. This data enables insurers to prioritize ‘fixes’ to their workflows that will accelerate client interactions and increase satisfaction.

Data makes insurance policy approvals quicker and less painful #

Data plays a crucial role in expediting insurance policy application approvals, resulting in more efficient processes for both insurers and customers.

To enhance the customer experience during the approval process, insurers have streamlined workflows. They strive to automate underwriting to achieve straight-through processing (STP) that creates a faster and more seamless application experience. These improvements are made possible not only by accessing and leveraging their own organizational data but also by integrating data from diverse third-party sources. Client data is combined with information from public and private sources, such as census reports, prescription histories, medical records, reinsurer expert systems and credit scoring data.

As a result, applications that used to take weeks to underwrite can now be decided within days, or even minutes. Many manual interactions, like medical exams or phone interviews, that traditionally slowed down the application process and negatively impacted customer experience, are no longer necessary. The utilization of data, streamlined workflows, and automated processes have created significant improvements in efficiency, reduced underwriting costs, and enhanced the overall experience for policy approvals.

Chart from the Equisoft and Celent Research report titled, “Getting Value from Data” that showcases the top ways life insurance carriers are getting value from their data today.

Carriers are leveraging data to enhance visibility into processes and operations #

The ability to access the right data in real-time for analytics purposes gives insurers clear insights into the effectiveness of their processes and operations. This, in turn, enables quicker and more effective decision-making, empowering carriers to optimize their operations and drive efficiency across their organization.

Real-time access to relevant data provides increased visibility into cost tracking, growth rates, and profitability, enabling more informed decision-making.

Operational effectiveness and workflow tracking data is also very valuable. Particularly in policy management, significant improvements can be achieved by tracking metrics such as completed items, time spent on tasks, number and timing of recent customer interactions, workflow progress, task attempts before completion, individual task completion rates, and departmental task volumes.

Carriers are leveraging data to build actuarial pricing models and automate underwriting decisions #

Carriers are leveraging data to build actuarial pricing models and automate underwriting decisions. By accessing their own organizational data and incorporating third-party sources, carriers gain insights into the risks they underwrite, allowing for optimized pricing strategies and an automated acceptance of certain risks.

Chart from the Equisoft and Celent Research report titled, “Getting Value from Data” highlights pricing and customer experience as the foremost priorities for leveraging data and analytical technologies.

Data integration from various public and private sources, such as census reports, channel and process for submission, prescription histories, and medical records, enhances underwriting and pricing capabilities. Carriers can explore new underwriting models by leveraging existing policy data and offer instant approvals on certain risks without medical requirements.

Pricing is crucial to meeting customer demands and differentiating your company in a competitive industry. The ultimate goal is to build actuarial pricing models by aggregating data from quotes to claims and capturing a holistic view of customers, including lifestyle and biometrics. The ability to interpret this data and create predictive models enables carriers to test hypotheses, optimize strategies, and make intelligent underwriting decisions even with limited evidence.

Carriers are leveraging data to help accelerate claims management and settlement #

By harnessing their data, carriers are able to streamline claims management and settlement processes in several ways:

- Automate Fraud Detection: By analyzing data patterns and anomalies, carriers can quickly spot and investigate fraudulent claims, reducing the overall time and resources dedicated to fraudulent cases.

- Predict Claim Volumes: Data analysis enables carriers to predict claim volumes based on historical patterns, seasonal trends, and other relevant factors. Carriers can anticipate demand and allocate resources accordingly, which ensures more efficient handling of claims and minimizes delays.

- Accelerate Claim Submission: By streamlining the collection and analysis of claim-related information, carriers can expedite the evaluation and settlement of claims. This reduces processing time, helps detect fraudulent claims, and helps speed up payments.
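As a rough illustration of the "patterns and anomalies" idea in the fraud detection point above, the sketch below flags claims whose amount is far from the typical value, using a modified z-score based on the median. It is a minimal example under stated assumptions, not a description of any carrier's fraud model: the field names (`claim_id`, `amount`), the sample data and the 3.5 cut-off are all hypothetical, and a real system would look at many more signals than claim amount.

```python
from statistics import median


def flag_unusual_claims(claims, threshold=3.5):
    """Return IDs of claims whose amount is an outlier by modified z-score.

    The score uses the median and the median absolute deviation (MAD),
    so one extreme claim does not distort the baseline it is compared to.
    """
    amounts = [c["amount"] for c in claims]
    if not amounts:
        return []
    center = median(amounts)
    mad = median([abs(a - center) for a in amounts])
    if mad == 0:
        return []  # amounts are too uniform to score this way
    return [
        c["claim_id"]
        for c in claims
        if 0.6745 * abs(c["amount"] - center) / mad > threshold
    ]


# Hypothetical sample data for illustration only.
claims = [
    {"claim_id": "C-001", "amount": 10_000},
    {"claim_id": "C-002", "amount": 12_000},
    {"claim_id": "C-003", "amount": 11_000},
    {"claim_id": "C-004", "amount": 9_500},
    {"claim_id": "C-005", "amount": 10_500},
    {"claim_id": "C-006", "amount": 95_000},
]
flagged = flag_unusual_claims(claims)
print(flagged)
```

A flagged claim is only a candidate for human review, not proof of fraud; the point is that routine claims can move through quickly while attention is focused on the unusual ones.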

Challenges preventing insurers from realizing the full potential of their data #

For the Celent and Equisoft report, Getting Value from Data, we talked to life insurance CIOs about how they derive value from data and where they face challenges. Their responses were revealing about the state of data utilization in life insurers:

- “We need data we can trust.”

- “No one is in charge of data quality. We have pockets of greatness surrounded by swampy moats. We put it in the cloud and are sorting it all out there.”

- “Untrustworthy data keeps leadership from confident use in customer-facing contexts.”

- “The lights went on – we were running a multi-billion-dollar company with a rat’s nest of interlocking spreadsheets – it was worse than COBOL.”

These comments speak to both the importance of data to insurance organizations and the challenges companies still face in trying to maximize its potential.

Interruptions in the seamless flow of data impair the efficient and automatic completion of basic, daily processes in life insurance organizations. These blockages require manual intervention. They create double-entry waste. They prolong the tyranny of paper.

Frustrations rise. Costs escalate. In a time when tight margins demand a focus on increased efficiency, data deficits and bottlenecks create problems that disable a product’s value proposition, annoy clients, and lead advisors to look for better solutions.

And, even worse, data stagnation prevents carriers from realizing the tremendous benefits that would come from increased use of data analytics and emerging AI applications. Carriers are data-driven businesses—and yet lack ready access to all of the client, policy, and advisor information required to innovate and deliver maximum value to their customers.

Data holds immense potential for generating value within an enterprise. However, there are inherent challenges in accessing and effectively harnessing the vast amount of data stored across multiple internal systems.

The Global State of Data Maturity #

The Assessing Data Readiness for AI in the Life Insurance Industry report highlights several barriers that hinder insurers from fully leveraging their data. While insurers globally rate as 'progressive' in data readiness maturity, significant challenges persist. Regional disparities reveal critical pain points: Australia, though leading with the highest maturity score of 72, still struggles with Quality & Integrity, which was a common challenge across all regions. In North America, smaller organizations and complex enterprises face hurdles in scaling data capabilities, while Latin America excels in infrastructure but struggles with consistent data quality enforcement. Canada, despite strong infrastructure, has the lowest regional score, underscoring the challenges in sourcing and integration. These obstacles, combined with gaps in data governance and enterprise-wide alignment, limit insurers’ ability to maximize the transformative potential of AI and modern analytics.

Legacy policy admin systems create data silos #

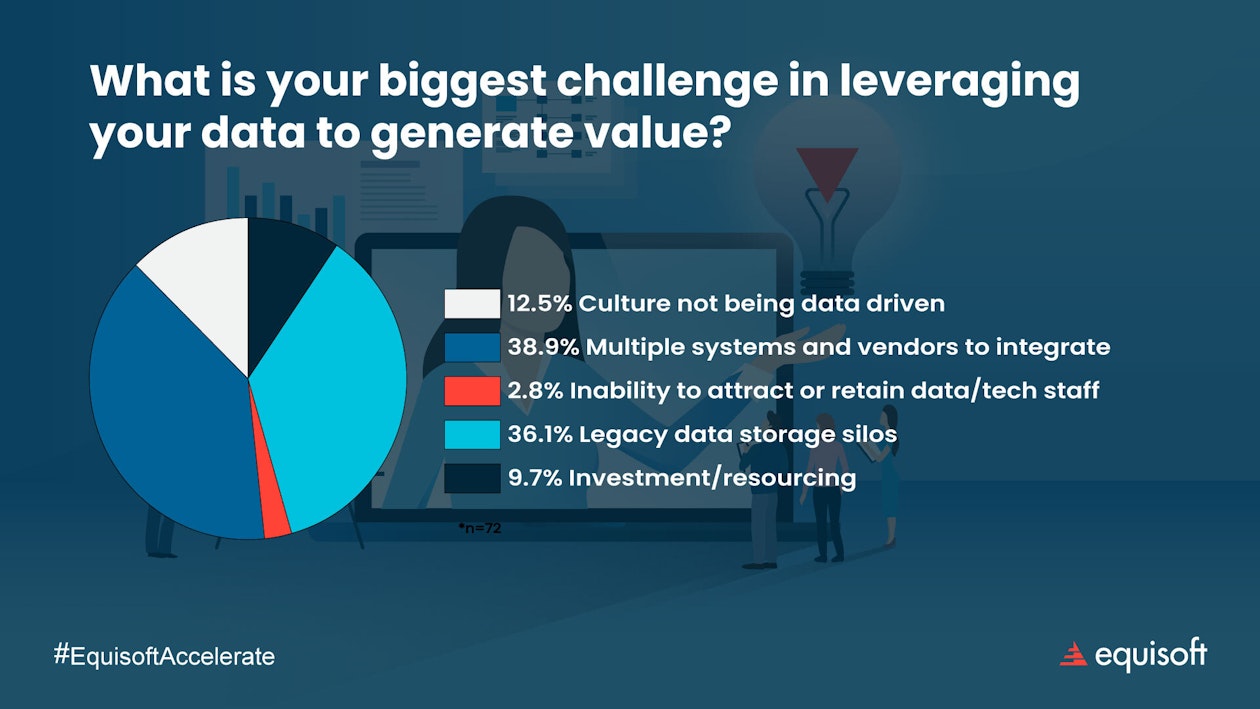

In an Accelerate Webinar on How North American Insurance Execs Are Leveraging Their Data Today, we asked attendees to rank their biggest challenge to leveraging data. Most execs were concerned with the need to integrate multiple systems and vendors and the impact of legacy data storage silos.

Legacy policy admin systems make it difficult for insurers to leverage their data effectively. These systems lack the compatibility and integration capabilities required to seamlessly connect with modern data use cases. A major obstacle lies in the fact that numerous legacy systems lack support for application programming interfaces (APIs) that facilitate data sharing. This makes it difficult to extract data from these systems and integrate it with modern platforms and tools.

The age of many of these legacy systems creates data challenges as well. Data formats remain static in aging platforms and over time may no longer be usable by modern solutions. Even worse, in most companies, large percentages of the data stored in legacy systems is not usable in modern contexts. This ‘dark data’ makes true transformation with digital applications extremely difficult and costly, unless it is extracted and transformed.

In addition, many long-standing insurers are currently running their operations on multiple systems. Over time, old systems may be upgraded or patched to enable increased functionality. New systems (externalized processing) may be added to accommodate changes in business processes, to fulfill new and unique data requirements, or as a result of merger and acquisition activity (when there is not enough time to adequately support all required features). These varied systems that make up the increasingly complex IT landscape often perform duplicate functions and store redundant data.

If system consolidation doesn’t happen, insurers end up with multiple policy administration systems (PAS) that have only slight variations in capabilities and perform similar tasks (such as billing, collection, administration, and account valuation). Not only does this duplication increase technical overhead, but it can also create an archipelago of data islands that isolate data from the wider technology ecosystem.

When software and processes lack standardization, organizations find themselves dealing with a "vendor stew" where data is duplicated and/or has evolved as the organization carries out various pilot projects, proofs of concept (POCs), and internal applications. Consequently, data often remains stuck in spreadsheets and isn’t captured or tracked properly.

As these systems diverge and change isn’t coordinated or recorded, determining the true source of information or the core set of facts becomes difficult.

With information fragmented across various systems and departments, it becomes difficult to draw connections or gain a holistic view of customers, policies and claims. Each department may have its own data repository in which vast amounts of data have been collected over a period of decades. But if the information can’t easily be accessed in real-time and migration is not pursued, the value of that data to the enterprise is greatly reduced.

Poor data governance leads to data bias #

Life insurance companies face a critical issue regarding the relevance of the vast volumes of data they possess. Collecting accurate data is essential for understanding customer expectations and delivering an exceptional customer experience. But bias can inadvertently be introduced into decision-making because of old data collection practices that reflect inappropriate attitudes. Collecting the right data is crucial to address diversity and mitigate biases.

Eliminating data bias requires a comprehensive analysis of the entire data collection process and the way an organization uses the data. Every step, from collection to analysis, needs careful review to ensure that the data points are relevant. For example, underwriting and claims processes should be examined to identify any indirect discrimination or perpetuation of systemic racism through life insurance policies.

Additionally, consideration should also be given to discarded data—was this data discarded because it is truly unnecessary, or because someone thought it was unnecessary? By addressing data biases and ensuring inclusive data practices, insurers can create a more equitable and customer-centric environment.

Data collection practices are poor and impact customer satisfaction and brand value #

The way a company collects customer information is crucial and can significantly impact the customer experience and perception of the insurer’s brand.

In an Equisoft Accelerate webinar titled "How to Build Data Foundations for a Digital Insurance Future," J.D. Power presented a study that highlighted a specific insurer's application process. The research revealed that, because the insurer housed disconnected solutions, a customer was asked for their name and address 10-12 times from quote to application. This is a huge source of frustration and inconvenience for potential customers. In fact, the research found that one of the main reasons consumers drop out of the sales process is because they are asked to repeat information in the application. And the study also showed that these frustrations with repetitive data gathering led to a negative impact on their perception of the carrier’s brand.

Organizational structure and culture don’t support the vision for a data-driven enterprise #

Research conducted by Equisoft in collaboration with Celent revealed that the biggest data challenge faced by life insurance CIOs is organizational culture.

Chart from the Equisoft and Celent Research report titled, “Getting Value from Data” that showcases the top challenges life insurance carriers face with data and analytics.

While each company's unique circumstances shape their approach to leveraging data, companies often face cultural obstacles on their path towards becoming data driven. Organizational challenges insurers face include:

Buy-in on strategic data initiatives is not sought from all stakeholders

One reason data initiatives such as migrations fail is insufficient stakeholder buy-in. To minimize risk and ensure a smooth data migration process, it is crucial to secure the support and buy-in of all executives who will be impacted. This includes engaging people who are directly affected by migration planning, resource allocation, and change management. It is especially important to garner support from stakeholders who possess valuable subject matter expertise and will be required to allocate their top talent to the project. By obtaining their commitment, the risk exposure can be effectively reduced right from the outset.

Organizational structure doesn’t support enterprise-wide data decision-making

As an organization grows its data-driven capabilities, the level at which data leadership resides will also change. At the beginning of data maturation, leadership usually sits with subject matter experts (SMEs) at project or department levels. As the company’s data capabilities grow, responsibility for data moves up the org chart to more senior positions such as VP of Data Solutions and Chief Data Officer so that decisions can be made about data governance on an enterprise-wide basis. Ultimately, in some companies there is even a data-focused role on the company's Board.

The overarching trend is to embrace comprehensive, integrated planning that encompasses all facets of the business. But for many companies this ideal has not yet been realized, meaning data is managed within silos and is perhaps governed differently across projects or departments.

Legacy attitudes towards data aren’t changed as part of modernization #

As companies work towards becoming data-driven insurers, change management becomes an increasingly important issue. The old way of collecting and managing data, as an input rather than a strategic asset to be leveraged, needs to be overhauled. Historically, insurers were “data minimalists” in their approach to collecting data because the technology and processes of the time were slow and cumbersome: the smaller the dataset entered, the faster the throughput in the application process and the sooner the policy could be issued. Today, however, leadership needs to set data governance standards, identify opportunities and set priorities for data initiatives. Staff in all areas will need to adapt to embrace new processes that rely on data, and the organization will need to plan for training, support and management to facilitate the changes.

Effective change management includes ensuring the buy-in and support of key stakeholders early on, clear and consistent communication, managing resistance to change, and helping employees develop the required skills and competencies to maximize the value of new data capabilities.

The full potential of system modernization and data migration cannot be fulfilled unless staff leave behind old attitudes towards data and embrace its ability to bring rigor to decision-making and accelerate processes that have been causing friction for decades.

Legacy system experts are retiring

As subject matter experts responsible for maintaining legacy systems approach retirement age, there is a growing concern about the loss of institutional knowledge. The workforce is aging, and critical staff members who have the experience and expertise in older systems are retiring. Additionally, new hires are not familiar with the old programming languages and hardware platforms used in these legacy systems. The retirement of these legacy system experts leads to a loss of institutional knowledge about the data itself, prior company processes, product features, and workarounds within the systems. Additionally, it leads to a knowledge gap regarding the location of data, its format, values, and the original setup of products.

Common reasons why data migrations fail #

Migrating life insurance data to a new policy administration system can present several challenges due to the complex nature of insurance data and its importance to the operation of the business. If data is missing, lost or corrupted, or becomes unavailable for any length of time, the impact on the organization will be severe.

Potential data migration risks include:

Lack of Data Migration Experience #

Data migrations are not common. These projects, associated with legacy system modernization and consolidation of core systems, may happen once or twice in a generation. So very few IT staff have experience with successfully navigating a migration project.

A major reason that data migrations fail is because they are driven by people who do not have the required business and systems knowledge to execute the project properly.

Leaders and staff who lack migration experience and expertise create risk in a conversion project because of a lack of understanding of the best methodology to use, the best timing on when to start, the correct tools to ensure a quick and accurate migration, and also a lack of familiarity with the types of problems that may arise during any complex migration.

These risks can lead to project delays, increased costs and, without proper back-up, iterative processes and testing, even data loss.

Often, data migration leaders focus their efforts on precise upfront requirements that cannot be attained. These can include expectations about the timeliness, cost, resource allocation and result of the project. Most frequently, the source system is large and complex, and the skillset needed for the requirements analysis is lacking.

Since data migrations are rare projects, experienced internal resources are scarce. This means that even though requirements and expectations are set at the outset, many requirements are also discovered mid-project, and these new requirements impact the design and implementation. This may lead to frustration and overruns, as projects end up taking longer than expected, and being more costly than expected.

Lack of informed planning for a data migration #

When project leaders and staff have little experience with data migration, it is difficult to accurately foresee potential problems along the critical path of a conversion project. It also becomes difficult to create a project plan that is achievable. Even seemingly simple decisions like “when do we start” become challenging when laying migration out in conjunction with the many moving parts of a modernization effort.

As a result, it’s common in the industry to overlook or underfund data migration as part of a larger modernization project. In some cases, because of the lack of pre-planning, insurers attempt to handle data migration internally, relying on existing tools and inexperienced staff. This creates a significant amount of risk. Alternatively, many companies opt not to undertake data migration at all, perceiving the associated risks to be too high.

Common issues related to inaccurate planning can include:

Human resource risk:

Without data migration experience, it is difficult to accurately forecast the resourcing impact of the project on day-to-day operations. Sometimes, organizations will decide against a migration because of the fear that the project will occupy IT staff for months, putting other initiatives at risk.

Downtime and Business Continuity:

Minimizing downtime during migration is essential to avoid disruptions to business operations. Planning for a smooth transition and ensuring business continuity during and after migration can be challenging.

Legacy System Decommissioning:

Properly retiring the old policy administration system after migration requires careful planning. Archiving data, ensuring data retention compliance, and making sure no critical data is lost during the decommissioning process are important aspects.

Data risk #

Insurers worry that migrating their existing blocks of data could result in data corruption, loss, inability to properly cleanse old data, and having to run parallel systems with old and new business blocks.

During a data migration, challenges that should be anticipated and addressed include:

Data Complexity and Heterogeneity:

Insurance data can be highly complex, with a wide range of policy types, clients stored in many places, coverage options, policy roles, underwriting details, and more. Different lines of business and different systems have different data structures, making data extraction and transformation more challenging.

Data Volume:

Insurance companies typically deal with vast amounts of data, and migrating all this data while ensuring minimal downtime and disruptions can be a significant challenge. Handling large data volumes efficiently requires careful planning and optimization.

Data Quality and Integrity:

Accurate and consistent data is crucial in the insurance industry. Data quality issues, such as missing or incorrect data, can lead to errors in policy administration, claims processing, and customer service. Ensuring data integrity during the migration process is essential.

Data Mapping and Transformation:

Mapping data from the old system to the new system's data model can be complex. Different systems may use different terminology, codes, or structures. Transforming data to match the new system's requirements while preserving its meaning and accuracy can be a time-consuming and error-prone process.
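To make the mapping and data-quality challenges above more concrete, here is a minimal sketch of transforming one legacy policy record into a target data model while collecting errors instead of silently dropping bad values. Everything specific in it is a hypothetical assumption for illustration: the legacy field names (`POLNO`, `STAT`, `ISSDT`, `FACEAMT`), the status code table, the `YYYYMMDD` date layout and the convention that face amounts are stored in cents. Real legacy layouts vary widely and need to be confirmed with subject matter experts.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical code table: legacy status codes -> target system values.
STATUS_MAP = {"A": "ACTIVE", "L": "LAPSED", "S": "SURRENDERED"}


def transform_policy(legacy):
    """Map one legacy record (dict of strings) to the target model.

    Returns (record, errors) so that problems are reported for review
    rather than hidden.
    """
    errors = []
    record = {"policy_number": legacy.get("POLNO", "").strip()}
    if not record["policy_number"]:
        errors.append("missing policy number")

    # Standardize codes: trim, upper-case, then look up in the code table.
    raw_status = legacy.get("STAT", "")
    record["status"] = STATUS_MAP.get(raw_status.strip().upper())
    if record["status"] is None:
        errors.append(f"unmapped status code: {raw_status!r}")

    # Normalize dates from the assumed legacy YYYYMMDD layout to ISO 8601.
    raw_date = legacy.get("ISSDT", "")
    try:
        record["issue_date"] = (
            datetime.strptime(raw_date, "%Y%m%d").date().isoformat()
        )
    except ValueError:
        record["issue_date"] = None
        errors.append(f"invalid issue date: {raw_date!r}")

    # Assumed convention: face amount stored as zero-padded cents.
    raw_amount = legacy.get("FACEAMT", "")
    try:
        record["face_amount"] = Decimal(int(raw_amount)) / Decimal(100)
    except ValueError:
        record["face_amount"] = None
        errors.append(f"invalid face amount: {raw_amount!r}")

    return record, errors


record, errors = transform_policy(
    {"POLNO": " 00123 ", "STAT": "a", "ISSDT": "19980315", "FACEAMT": "0025000000"}
)
print(record, errors)
```

Keeping the code tables and field rules together in one place, and returning errors alongside each record, makes it easier to test the transformation early and to review rejected records with the business.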

Data Migration Best Practices #

Due to limited data migration experience and expertise, many companies face potential data risks when attempting an in-house conversion. Even during the planning stage, organizations tend to perceive data migration as an IT project rather than recognizing it as a strategic business initiative. Consequently, they frequently underestimate the magnitude of the task at hand, which can span multiple years, require substantial change management, and impact various functional areas throughout the company.

The best migration solutions address all of an insurer’s perceived challenges and risks. They ensure quick and effective migration so that insurers can retire their old systems and move forward on their modernized technology as soon as possible. Below are the best practices to consider in order to ensure an effective migration of all your data.

Solve the Data Migration Resource Problem: Choose a vendor with experience and expertise #

Companies that lack in-house data migration experience and expertise will need to engage a company that specializes in data conversion to complete their project. This type of vendor, if chosen wisely, can become a key partner in an insurer’s digital transformation and remove much of the risk from migration initiatives.

During the vendor selection process, insurers should look for vendors with years of experience doing data migrations to and from life insurance Policy Administration Systems, as well as assess the vendor’s data migration methodology. It is important to ensure that the selected vendor has experience with both the legacy and new policy administration system, enabling them to anticipate and solve for potential challenges.

During the selection process, insurers should be wary of inflated vendor claims. Validating experience and expertise is critical, and asking the right questions will often reveal realities vendors typically try to mask, such as accuracy metrics and the number of migrations they have completed from your type of source system. At the end of the day, a vendor is a long-term partner, and vendor selection will play a key role in the success of your business.

One important thing to look out for when selecting a vendor is the type of team they choose to put together. Whereas most vendors will select a team of 10-15 people with generic system knowledge and limited migration knowledge, an experienced vendor will look to minimize the size of the team but maximize the expertise each member brings to the table. This includes personnel that can bring forward the appropriate legacy system and business knowledge so that the migration plan and process is effective.

Solve the System Problem: Choose the best data migration tools and methodology #

Proven, leading-edge tools specifically tailored for data migration empower business experts to take control of the process right from the beginning, rather than depending solely on IT and involving the business unit at a later stage.

Insurers should look for tools with a streamlined data conversion system that simplifies the development and QA processes, including file management associated with the migration and the necessary transformations. Ideally, all business transformations should be consolidated in a single location within the tool. This is in contrast to traditional extract, transform, and load (ETL) processes, where transformations are scattered across multiple locations, which makes them difficult to test, complicates error tracking, and slows the workflow.
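As an illustration of keeping every business transformation in one place, here is a minimal Python sketch. It is not the design of any particular migration tool; the legacy field names (POLNO, ISSDT, STAT, FACEAMT), the target field names, and the status codes are all hypothetical.

```python
from datetime import datetime


def to_iso_date(value: str) -> str:
    """Convert a legacy MMDDYYYY date string to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value, "%m%d%Y").date().isoformat()


def to_status(value: str) -> str:
    """Map a one-letter legacy status code to a descriptive target value."""
    return {"A": "active", "L": "lapsed", "T": "terminated"}[value.strip().upper()]


# The single location where every transformation is declared:
# target field -> (hypothetical legacy field, transformation function)
RULES = {
    "policy_number": ("POLNO", str.strip),
    "issue_date": ("ISSDT", to_iso_date),
    "status": ("STAT", to_status),
    "face_amount": ("FACEAMT", lambda v: int(v) / 100),  # assumes legacy stores cents
}


def transform(legacy_record: dict) -> tuple[dict, list[str]]:
    """Apply every rule and collect errors per field instead of stopping at the first."""
    target, errors = {}, []
    for target_field, (legacy_field, rule) in RULES.items():
        try:
            target[target_field] = rule(legacy_record[legacy_field])
        except (KeyError, ValueError) as exc:
            errors.append(f"{target_field}: {exc!r}")
    return target, errors


# Made-up sample record for illustration.
record = {"POLNO": " L-000123 ", "ISSDT": "03152004", "STAT": "a", "FACEAMT": "25000000"}
converted, problems = transform(record)
```

Because each rule is a small named function registered in one table, it can be tested on its own, and a failed field is reported by name rather than surfacing as a generic load error.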

Efficient data conversion tools should facilitate early testing to uncover the unknowns earlier in a migration project and simplify error management. They should have the ability to intake the data requirements of the new target system. This enables the seamless integration of these inputs into the conversion system, streamlining the overall process.

Types of tools in a data migration tech stack:

- At the center of the migration tech stack should be a data transformation rules engine: a multi-purpose migration tool that embraces legacy technology and proprietary data formats.

- A data discovery tool that identifies target system data attributes and automates key repetitive migration tasks.

- Migrations require robust data reconciliation and reporting functionality. The best migration reporting tools expedite the transformation, QA and testing processes. The report output is essential to rapidly iterating to refine transformation logic. The report content should be easy to use and share.
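To make the reconciliation idea concrete, the following Python sketch compares a source extract with the migrated target and produces a small report. It is a simplified illustration only; the field names (policy_number, face_amount) and the sample rows are assumptions, and a real reconciliation would cover many more attributes.

```python
from decimal import Decimal


def reconcile(source_rows, target_rows, key="policy_number", amount="face_amount"):
    """Compare source and target extracts and return a reconciliation report."""
    source = {row[key]: row for row in source_rows}
    target = {row[key]: row for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "source_count": len(source),
        "target_count": len(target),
        # Policies that were extracted but never arrived in the target system.
        "missing_in_target": sorted(source.keys() - target.keys()),
        # Policies in the target that have no counterpart in the source.
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        # Policies present on both sides whose amounts differ.
        "amount_mismatches": sorted(
            k for k in shared
            if Decimal(source[k][amount]) != Decimal(target[k][amount])
        ),
        "source_total": sum(Decimal(r[amount]) for r in source_rows),
        "target_total": sum(Decimal(r[amount]) for r in target_rows),
    }


# Made-up sample data for illustration.
source_rows = [
    {"policy_number": "P1", "face_amount": "100000.00"},
    {"policy_number": "P2", "face_amount": "250000.00"},
    {"policy_number": "P3", "face_amount": "50000.00"},
]
target_rows = [
    {"policy_number": "P1", "face_amount": "100000.00"},
    {"policy_number": "P2", "face_amount": "25000.00"},
]
report = reconcile(source_rows, target_rows)
```

In this sample, the report flags P3 as missing from the target and P2 as an amount mismatch, which is the kind of output a team can use to refine transformation logic between test runs.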

Future Data Use Cases: AI, Generative Language Models and Analytics #

Following best practices will make more of the organization’s data available in real time for use in emerging solutions, and will enable more automation, better product design, enhanced CX and improved decision-making. The rapid advancement of tools and technology, particularly in AI, the IoT, automation and analytics, presents major opportunities for life insurers.

Big Data #

What Is Big Data and How Can It Empower Insurers to Deliver More Personalized, Efficient, and Customer-Centric Solutions?

Big data, characterized by high volume, velocity, and variety, has revolutionized the life insurance industry by enabling insurers to harness vast datasets for actionable insights. Traditionally reliant on historical data like mortality rates and consumer behavior, insurers can now leverage advanced analytics to process information more efficiently and precisely.

This transformation empowers insurers to personalize customer offerings, optimize risk assessments, and streamline operations. By integrating data from IoT devices, social media, and financial records, insurers gain a holistic view of customers, fostering tailored services and proactive engagement. Big data also enhances fraud detection, automates processes, and improves customer satisfaction. As insurers continue to navigate challenges such as data integration and compliance, big data remains a pivotal tool for delivering innovative, customer-centric solutions.

APIs

What are APIs?

API stands for Application Programming Interface. An API is a set of rules that allows different software applications to communicate with each other. APIs define the methods and data formats that apps will use to request and exchange information, services, or functionality from other software components. APIs enable communication and data exchange between different software systems, both internal and external. They act as bridges between back, middle and front office systems, and this improves efficiency, accelerates innovation, and optimizes customer experiences. APIs connect the systems that power each stage in the policy life cycle. They automate steps in processes, accelerate decision-making, and are the building blocks of most modern PAS available on the market today.

Common uses of APIs in life insurance include:

- Quote and Application APIs: Carriers will provide brokers with APIs that enable them to request real-time quotes. They take into account various underwriting parameters (age, health, lifestyle, etc.) and quickly produce quote comparisons, speeding up the application process.

- Underwriting APIs: Carriers use APIs to access databases and gather the information needed to determine an applicant’s insurability and premium rates.

- Policy Management APIs: These APIs enable insurers to provide self-service portals where agents and clients can make real-time changes to policy information like address, beneficiary, and personal details.

- Payment Gateway APIs: These gateways enable secure payment of premiums through credit cards, electronic transfer and mobile payment methods.

- Claims Processing APIs: APIs are used to speed the collection of claims data and communication between the carrier, client and third-party service providers. They accelerate the claims process and make it more transparent for the customer.

- Data Verification APIs: These types of APIs are important because they enable carriers to connect to external providers to verify medical or credit information.

- Analytics APIs: A growing use of APIs is to connect to BI and analytics tools that provide insights into market trends, customer preferences, and shifting risk patterns.
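As a rough illustration of the quote and application use case above, the Python sketch below builds a JSON request body and parses a JSON response into comparable quotes. It does not describe any real carrier’s API: the field names, the response shape, and the sample premiums are all invented for the example, and no network call is made.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class QuoteRequest:
    """Hypothetical underwriting parameters a broker might send."""
    age: int
    smoker: bool
    face_amount: int
    term_years: int


@dataclass
class Quote:
    carrier: str
    monthly_premium: float


def build_request_body(request: QuoteRequest) -> str:
    """Serialize the request in the data format the API is assumed to expect."""
    return json.dumps(asdict(request))


def parse_quotes(response_body: str) -> list[Quote]:
    """Turn the (assumed) response payload into quotes sorted by premium."""
    payload = json.loads(response_body)
    quotes = [Quote(q["carrier"], float(q["monthly_premium"])) for q in payload["quotes"]]
    return sorted(quotes, key=lambda q: q.monthly_premium)


body = build_request_body(
    QuoteRequest(age=35, smoker=False, face_amount=500_000, term_years=20)
)

# Made-up response, standing in for what a quote service might return.
sample_response = (
    '{"quotes": ['
    '{"carrier": "Carrier B", "monthly_premium": 31.5}, '
    '{"carrier": "Carrier A", "monthly_premium": 28.75}]}'
)
quotes = parse_quotes(sample_response)
```

The point of the sketch is the contract: both sides agree on methods and data formats, so the broker’s system can compare quotes without knowing how each carrier computes them.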

Data Integration #

Data integration, as a technology, is simply a means of passing requests and responses for information between two disconnected sources. In the context of modernizing policy administration, it can be used to access data on legacy systems and present it to modern applications without performing a data migration. The process can be ‘one-way’ or ‘bi-directional’. In a one-way scenario, data can be sourced from a policy admin system, for instance, and delivered to a variety of customer experience applications on the web or on mobile devices, where it can be viewed by the various actors in the customer experience. As part of the process, the data would be cleansed and formatted to match the requirements of the receiving entity.

In a bi-directional scenario, if the customer made a change to their data on the portal, the process would be reversed: the updated data would be converted back to its original format and returned to the PAS.
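The two directions described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the legacy field names (INS_NM, ADDR1, ZIP), the fixed field widths, and the upper-case legacy format are hypothetical, and real integrations would also handle validation, security and error cases.

```python
# Hypothetical mapping between legacy PAS fields and portal fields.
FIELD_MAP = {"INS_NM": "insured_name", "ADDR1": "address_line_1", "ZIP": "postal_code"}

# Assumed fixed widths of the legacy fields.
WIDTHS = {"INS_NM": 20, "ADDR1": 25, "ZIP": 10}


def pas_to_portal(pas_record: dict) -> dict:
    """One-way direction: cleanse and rename legacy fields for the portal."""
    portal = {}
    for legacy_field, portal_field in FIELD_MAP.items():
        # Strip fixed-width padding and present values in title case.
        portal[portal_field] = pas_record[legacy_field].strip().title()
    return portal


def portal_to_pas(portal_record: dict) -> dict:
    """Reverse direction: restore the upper-case, fixed-width legacy format."""
    pas = {}
    for legacy_field, portal_field in FIELD_MAP.items():
        width = WIDTHS[legacy_field]
        pas[legacy_field] = portal_record[portal_field].upper().ljust(width)[:width]
    return pas


# Made-up legacy record, padded to the assumed widths.
legacy = {
    "INS_NM": "JANE DOE".ljust(20),
    "ADDR1": "12 MAIN ST".ljust(25),
    "ZIP": "06103".ljust(10),
}

portal_view = pas_to_portal(legacy)             # delivered to the portal
portal_view["address_line_1"] = "48 Oak Avenue"  # customer edits their address
updated = portal_to_pas(portal_view)             # returned to the PAS
```

Note that simple title-casing is lossy for some names and addresses, so a production mapping would likely keep the original value alongside the display value.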

This approach is ideal for making data from a multitude of legacy systems available to new sales and service solutions. It avoids the time and cost of a data migration but enables companies to work with existing books of business. It is a good complement to Greenfield modernization approaches, which stand up products on a new PAS and then begin putting new business on the system, leaving the old PAS untouched.

Artificial Intelligence and Generative Language Models #

The chart from the Equisoft and Celent research report, “Getting Value from Data”, depicts the top data-dependent technologies that carriers currently value.

The use of AI and generative language models, such as ChatGPT, is rapidly expanding within the life insurance industry. These technologies are finding applications across a spectrum of functions, including but not limited to claims adjudication, new product design, pricing strategies, risk evaluation, and real-time customer support.

AI and generative language models are trained on extensive datasets, drawing from large information pools. These technologies are inherently driven by data: the quality of the outcomes they produce depends on the quality and quantity of the data fed into them. More high-quality data generally means more accurate insights. When data accuracy and quality are high, insurers can ‘trust’ the reports they receive and feel confident making decisions based on the information being generated.

The Internet of Things

Almost everything, from household appliances to wearables to monitors on cars and bikes, now generates vast volumes of data that are being collected in real time. The IoT and the interconnection of databases through APIs are rapidly expanding the amount of data companies have access to. However, to get to the point where companies can make confident and strategic decisions using insight from data, they need to maximize access to their data and ensure it is secured, aggregated, standardized, normalized, and “fit for use” by their AI and analytics tools.

What opportunities does data offer as fuel for AI, generative language models, analytics and IoT? #

The future of AI, generative language models, analytics, and data from the Internet of Things (IoT) in the life insurance industry is full of potential. AI and alternative data sets allow insurers to uncover risk correlations that may elude human observation. This presents a tremendous opportunity to enhance operations by boosting efficiency, reducing costs, and improving the customer experience.

These technologies will continue to shape the way insurers operate, offer products, and engage with customers.

Some future use cases for AI, generative language models, analytics, and IoT data in life insurance include:

- Personalized Insurance Products: AI-driven analytics and generative language models can analyze vast amounts of customer data, including IoT-generated data, to create highly personalized insurance products that cater to individual needs, lifestyles, and risk profiles.

- Dynamic Underwriting: Insurers can leverage real-time data from IoT devices, such as wearables and health monitors, to provide more accurate risk assessments and fairer pricing. This data can be used to adjust policy premiums in relation to policyholders’ behaviors and conditions.

- Real-Time Customer Engagement: AI-driven chatbots and virtual assistants can interact with customers in real-time, answering questions, offering policy recommendations, and providing personalized customer support.

- Predictive Analytics for Customer Turnover: Analysis of data from IoT devices and other customer interactions can feed AI-driven predictive models that identify potential turnover signals and enable insurers to proactively engage at-risk customers.

- Parametric Insurance: Systems could be programmed to use data from IoT sensors to automatically trigger insurance payouts when certain conditions are met. For example, if a deceased policyholder had previously received a cancer diagnosis, the insurer would pay out to the beneficiary without the need for a claim investigation.

- Life Insurance Underwriting Improvements: AI and analytics can process health data from wearables and health devices to streamline the life insurance underwriting process, making it faster and more accurate. For example, programs can already analyze social media posts and determine whether or not a person is a smoker.

These use cases demonstrate the potential for AI, generative language models, analytics, and IoT data to revolutionize the life insurance industry, enhancing customer experiences, improving risk management, and driving innovation in insurance products and services.
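The parametric insurance use case in the list above comes down to checking a set of predefined conditions against incoming data. The Python sketch below shows that logic in its simplest form; the policy fields, the event shape, and the event type name are hypothetical, and a real implementation would sit behind regulatory, fraud and data-verification controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    policy_number: str
    in_force: bool
    covered_events: frozenset  # hypothetical event types that trigger a payout
    payout_amount: int


def evaluate_trigger(policy: Policy, event: dict):
    """Return the payout amount if every predefined condition is met, else None."""
    conditions = (
        policy.in_force,
        event["policy_number"] == policy.policy_number,
        event["type"] in policy.covered_events,
        event["verified"],  # assumed flag set by a trusted data provider
    )
    return policy.payout_amount if all(conditions) else None


# Made-up policy and events for illustration.
policy = Policy("P-1001", True, frozenset({"critical_illness_diagnosis"}), 100_000)

verified_event = {
    "policy_number": "P-1001",
    "type": "critical_illness_diagnosis",
    "verified": True,
}
unverified_event = {**verified_event, "verified": False}

payout = evaluate_trigger(policy, verified_event)
no_payout = evaluate_trigger(policy, unverified_event)
```

Because the conditions are explicit and data-driven, the payout decision can be made without a manual claim investigation, which is what distinguishes the parametric model.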

Assessing Data Readiness for AI as a Life Insurer #

The report Assessing Data Readiness for AI in the Life Insurance Industry provides comprehensive research that sheds light on critical barriers and opportunities for AI adoption. Despite life insurers ranking themselves highly in digital maturity, data challenges remain the primary obstacle to successful AI implementation. While AI holds transformative potential for enhancing customer experiences, improving operational efficiency, and driving growth, insurers must first establish robust data foundations.

Global Key Findings: The AI Challenge

While life insurers globally rank as ‘progressive’ in data readiness, nearly half still feel unprepared to implement AI solutions, with 78% identifying data readiness as the top obstacle to realizing AI’s potential. Several factors help explain this paradox. Insurance is a risk-averse industry, and insurers are hesitant to declare AI readiness amid evolving requirements.

Data maturity often applies to specific, controlled use cases rather than holistic AI strategies across the value chain. Additionally, AI adoption frequently occurs in isolated pockets of innovation rather than enterprise-wide initiatives. Differing perspectives between C-suite executives, who focus on strategy and governance, and operational staff facing day-to-day data challenges, further contribute to the disparity. Addressing these dynamics is key to overcoming the readiness gap and fully unlocking AI's transformative power.

In this interview, Mike Allee, President of UCT, discusses how adopting a data-first mindset is essential for successfully implementing AI and solving challenges effectively.

Conclusion #

Data plays a crucial role across all aspects of the insurance value chain, including risk assessment, underwriting, product development, and claims management. Effective methods and tools for data migration and data integration enable insurers to unlock hidden value trapped in their legacy systems and better leverage the power of data analytics to extract practical insights, make well-informed decisions, and provide enhanced experiences to customers. By leveraging data, carriers can shape their strategies, improve operational efficiency, and optimize overall business performance.

While there are many challenges and risks associated with true modernization of a company’s core administration (data migration projects being a typical pain point), having the right expertise on board will help insurers navigate projects correctly, anticipate pitfalls before they happen, and deliver on budget and in a timely manner. As carriers evolve their ability to access the vast array of data they possess, they will increasingly be able to impact every area of the business and create significant competitive advantage.